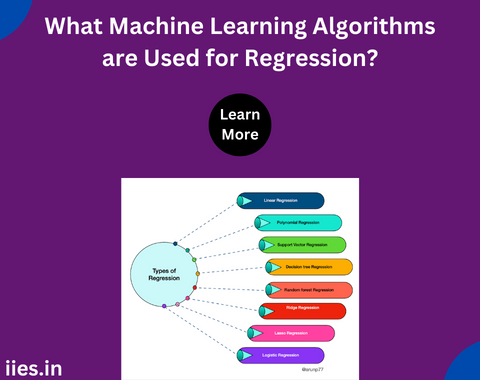

1. Linear Regression:

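– Overview: Linear regression is perhaps the most straightforward regression algorithm. It models the target as a weighted sum of the input features plus an intercept, with the weights chosen to minimize the sum of squared errors between predictions and observed values.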

– Application: Widely used in various fields, linear regression is effective when the relationship between variables is approximately linear.
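As a minimal sketch of the least-squares idea for a single feature, the closed-form slope and intercept can be computed directly. The function name `fit_line` and the toy data are illustrative assumptions, not taken from any library.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-like and variance-like sums (unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy data generated from y = 2x + 1 (hypothetical example values).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
```

On this noise-free data the fit recovers a slope of 2 and an intercept of 1. With several features the same idea is usually solved with a linear-algebra routine rather than by hand.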

2. Ridge Regression:

– Overview: Ridge regression extends linear regression with an L2 regularization term, a penalty proportional to the sum of the squared coefficients, which shrinks the coefficients toward zero. It is particularly useful when dealing with multicollinearity (high correlation between independent variables).

– Application: Commonly employed when a dataset contains multiple correlated features, a situation in which ordinary linear regression tends to produce unstable coefficient estimates.
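The effect on correlated features can be sketched with two features that are exact copies of each other. The helper `ridge_two_features` below is a hypothetical, intercept-free illustration that solves the penalized normal equations (X^T X + lam * I) w = X^T y with Cramer's rule; it is not a library routine.

```python
def ridge_two_features(rows, ys, lam):
    """Solve (X^T X + lam * I) w = X^T y for two features, no intercept."""
    a = sum(r[0] * r[0] for r in rows) + lam
    b = sum(r[0] * r[1] for r in rows)
    d = sum(r[1] * r[1] for r in rows) + lam
    p = sum(r[0] * y for r, y in zip(rows, ys))
    q = sum(r[1] * y for r, y in zip(rows, ys))
    det = a * d - b * b
    if det == 0:
        raise ValueError("normal equations are singular; no unique solution")
    # Cramer's rule for the 2x2 system [[a, b], [b, d]] w = [p, q].
    return ((p * d - b * q) / det, (a * q - b * p) / det)


# Two perfectly collinear features (the second column repeats the first).
rows = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
ys = [2.0, 4.0, 6.0]
w = ridge_two_features(rows, ys, lam=1.0)
```

With `lam=0.0` the system is singular and the function raises, which mirrors the failure of plain least squares here. With `lam=1.0` the weight is split evenly between the two identical features (each is 28/29).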

3. Lasso Regression:

– Overview: Like ridge regression, lasso regression adds a regularization term, but it penalizes the sum of the absolute values of the coefficients (an L1 penalty) rather than the sum of their squares. As a result, lasso tends to produce sparse models by driving some of the coefficients to exactly zero.

– Application: Useful for feature selection, especially when dealing with datasets with a large number of features.
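Why the coefficients land on exactly zero can be seen in the one-feature case, where the lasso solution has a closed form based on soft-thresholding. This sketch assumes no intercept and the objective 0.5 * sum((y - w*x)^2) + lam * |w|; the names `soft_threshold` and `lasso_1d` are illustrative.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam, clamping to zero inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0


def lasso_1d(xs, ys, lam):
    """Minimizer of 0.5 * sum((y - w*x)**2) + lam * abs(w) for one feature."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, lam) / sxx


xs = [1.0, 2.0, 3.0]
strong = [2.0, 4.0, 6.0]    # clearly related to x
weak = [0.1, -0.1, 0.05]    # almost unrelated to x (hypothetical values)

w_strong = lasso_1d(xs, strong, lam=14.0)  # shrunk from 2.0 to 1.0
w_weak = lasso_1d(xs, weak, lam=1.0)       # set to exactly 0.0
```

The weakly related feature is removed entirely, while the strong one is only shrunk. This is the mechanism behind lasso's use for feature selection.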

4. Decision Tree Regression:

– Overview: Decision trees divide the dataset into subsets based on the values of independent variables, creating a tree-like structure. Decision tree regression predicts the target variable based on the average of the target values in the corresponding leaf node.

– Application: Effective when the relationship between variables is nonlinear or when dealing with categorical data.
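The split-then-average idea can be sketched with the smallest possible tree, a single split (often called a stump) on one feature. `fit_stump` and `predict_stump` are hypothetical helper names; a full tree would apply the same search recursively to each side.

```python
def fit_stump(xs, ys):
    """Fit a one-split regression tree; returns (threshold, left_mean, right_mean)."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # cannot split between identical feature values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        # Sum of squared errors if each side predicts its own mean.
        sse = (sum((y - left_mean) ** 2 for y in left)
               + sum((y - right_mean) ** 2 for y in right))
        if best is None or sse < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (sse, threshold, left_mean, right_mean)
    if best is None:
        raise ValueError("need at least two distinct feature values")
    return best[1:]


def predict_stump(stump, x):
    """Return the mean target of the leaf that x falls into."""
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [5.0, 6.0, 7.0, 20.0, 21.0, 22.0]
stump = fit_stump(xs, ys)
```

Here the best split falls at 6.5, and the two leaves predict 6.0 and 21.0, the averages of the targets on each side.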

5. Random Forest Regression:

– Overview: Random forests build multiple decision trees, each trained on a bootstrap sample of the data and typically on a random subset of the features, and average their predictions to enhance accuracy and reduce overfitting.

– Application: Suitable for large datasets with complex relationships between variables, providing robust predictions and feature importance rankings.
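A rough sketch of the "many trees, averaged" idea follows. It uses bagged one-split trees on a single feature, so it illustrates only the bootstrap-and-average part of a random forest and leaves out random feature subsets. All function names, the seed, and the data are assumptions made for illustration.

```python
import random


def fit_stump(xs, ys):
    """One-split regression tree; falls back to a constant if no split exists."""
    pairs = sorted(zip(xs, ys))
    overall = sum(ys) / len(ys)
    # (sse, threshold, left_mean, right_mean); infinite threshold = no split.
    best = (float("inf"), float("inf"), overall, overall)
    for i in range(1, len(pairs)):
        if pairs[i][0] == pairs[i - 1][0]:
            continue
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm = sum(left) / len(left)
        rm = sum(right) / len(right)
        sse = (sum((y - lm) ** 2 for y in left)
               + sum((y - rm) ** 2 for y in right))
        if sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    return best[1:]


def fit_bagged_stumps(xs, ys, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest


def predict_forest(forest, x):
    """Average the predictions of all trees."""
    preds = [lm if x <= t else rm for t, lm, rm in forest]
    return sum(preds) / len(preds)


xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [5.0, 6.0, 7.0, 20.0, 21.0, 22.0]
forest = fit_bagged_stumps(xs, ys)
low = predict_forest(forest, 2.0)
high = predict_forest(forest, 11.0)
```

Because each tree sees a slightly different sample, the individual thresholds and leaf values vary, and averaging smooths out that variation.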

6. Support Vector Regression (SVR):

– Overview: SVR is an extension of support vector machines for regression tasks. It fits a function that keeps as many training points as possible within a margin of width ε around its predictions, penalizing only the points that fall outside that margin.

– Application: Particularly effective when dealing with datasets with a high dimensionality.
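The standard SVR formulation scores errors with an ε-insensitive loss: residuals smaller than ε cost nothing, and larger ones are penalized linearly. The sketch below shows only this loss, not the full optimization; the function name is an illustrative choice.

```python
def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon tube, linear in the excess outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


inside = epsilon_insensitive_loss(3.0, 3.2, epsilon=0.5)   # within the tube
outside = epsilon_insensitive_loss(3.0, 4.0, epsilon=0.5)  # 0.5 beyond it
```

Points that fall inside the tube do not influence the fitted function at all, which is why only a subset of the training data (the support vectors) ends up defining the model.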

7. K-Nearest Neighbors (KNN) Regression:

– Overview: KNN regression predicts the target for a new point by averaging the target values of the k training points closest to it in the feature space.

– Application: Useful when there is a spatial or temporal pattern in the data, and the target variable is influenced by nearby data points.
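A minimal sketch of this neighbor-averaging step, assuming Euclidean distance and unweighted averaging (both are common defaults, but they are choices, not requirements). The function name and data are hypothetical.

```python
import math


def knn_regress(train_points, train_targets, query, k):
    """Average the targets of the k training points nearest to query."""
    by_distance = sorted(
        zip(train_points, train_targets),
        key=lambda pair: math.dist(pair[0], query),
    )
    nearest_targets = [target for _, target in by_distance[:k]]
    return sum(nearest_targets) / len(nearest_targets)


points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
targets = [1.0, 2.0, 3.0, 10.0]
prediction = knn_regress(points, targets, query=(0.1, 0.1), k=3)
```

The distant point at (5, 5) is ignored, so the prediction is the mean of the three nearby targets, 2.0. In practice the features are usually scaled first so that no single feature dominates the distance.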

8. Gradient Boosting Regression:

– Overview: Gradient boosting builds an ensemble of weak learners (typically shallow decision trees) sequentially, with each new learner fitted to the residual errors of the ensemble built so far.

– Application: Powerful for creating accurate models, especially when dealing with large datasets.
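The sequential error-correcting loop can be sketched for squared-error loss, where each new weak learner is fitted to the current residuals. This version uses one-split trees on a single feature and a fixed learning rate; the names `fit_boosted`, `predict_boosted`, and `training_mse` are assumptions for illustration.

```python
def fit_stump(xs, ys):
    """One-split regression tree; returns (threshold, left_mean, right_mean)."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i][0] == pairs[i - 1][0]:
            continue
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm = sum(left) / len(left)
        rm = sum(right) / len(right)
        sse = (sum((y - lm) ** 2 for y in left)
               + sum((y - rm) ** 2 for y in right))
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    return best[1:]


def predict_stump(stump, x):
    threshold, lm, rm = stump
    return lm if x <= threshold else rm


def fit_boosted(xs, ys, n_rounds, learning_rate=0.5):
    """Start from the mean, then repeatedly fit a stump to the residuals."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict_boosted(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


def training_mse(model, xs, ys):
    return sum((y - predict_boosted(model, x)) ** 2
               for x, y in zip(xs, ys)) / len(xs)


# Nonlinear toy data: y = x squared.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.0, 1.0, 4.0, 9.0, 16.0, 25.0]
mse_0 = training_mse(fit_boosted(xs, ys, n_rounds=0), xs, ys)
mse_1 = training_mse(fit_boosted(xs, ys, n_rounds=1), xs, ys)
mse_20 = training_mse(fit_boosted(xs, ys, n_rounds=20), xs, ys)
```

The training error drops as rounds are added. A smaller learning rate slows that drop, but in practice it often generalizes better when paired with more rounds.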

9. Neural Network Regression:

– Overview: Neural networks, especially those with a single output node, can be used for regression tasks. They learn complex relationships between variables through layers of interconnected nodes.

– Application: Suitable for tasks where the relationships are highly nonlinear and the dataset is sufficiently large.

10. Elastic Net Regression:

– Overview: Elastic Net combines the L1 penalty of lasso and the L2 penalty of ridge regression in a single regularization term, providing a balanced approach that handles multicollinearity while still performing feature selection.

– Application: Well-suited for datasets with a large number of features and potential collinearity issues.
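How the two penalties interact can be seen in the one-feature, intercept-free case, assuming the objective 0.5 * sum((y - w*x)^2) + l1 * |w| + 0.5 * l2 * w^2. The L1 part soft-thresholds the coefficient and the L2 part shrinks it further. Libraries parametrize the penalty differently, so the names `l1` and `l2` here are illustrative only.

```python
def elastic_net_1d(xs, ys, l1, l2):
    """Minimizer of 0.5*sum((y - w*x)**2) + l1*abs(w) + 0.5*l2*w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    # L1 part: soft-threshold the correlation term.
    if sxy > l1:
        shrunk = sxy - l1
    elif sxy < -l1:
        shrunk = sxy + l1
    else:
        shrunk = 0.0
    # L2 part: extra shrinkage through the enlarged denominator.
    return shrunk / (sxx + l2)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]
w_plain = elastic_net_1d(xs, ys, l1=0.0, l2=0.0)   # ordinary least squares
w_mixed = elastic_net_1d(xs, ys, l1=7.0, l2=7.0)   # both penalties active
w_zeroed = elastic_net_1d(xs, ys, l1=30.0, l2=7.0)  # L1 large enough to zero it
```

Setting `l2` to zero recovers the lasso solution, and setting `l1` to zero recovers the ridge solution.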

11. Huber Regression:

– Overview: Huber regression is a robust regression algorithm that limits the impact of outliers by applying squared error to small residuals and absolute error to large ones, with a threshold that controls where the switch happens.

– Application: Effective when dealing with datasets containing noisy or skewed data points.
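The loss behind this behavior is short enough to write out. Below is a sketch of the standard Huber loss, where `delta` is the threshold between the quadratic and linear regions; the function name is an illustrative choice.

```python
def huber_loss(residual, delta=1.0):
    """Quadratic for small residuals, linear for large ones."""
    size = abs(residual)
    if size <= delta:
        return 0.5 * size ** 2
    return delta * (size - 0.5 * delta)


small = huber_loss(0.5)       # 0.125, same as half the squared error
large = huber_loss(3.0)       # 2.5, versus 4.5 for half the squared error
squared_large = 0.5 * 3.0 ** 2
```

Because large residuals grow only linearly, a single outlier pulls the fit far less than it would under plain squared error.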

12. Quantile Regression:

– Overview: Quantile regression focuses on estimating the conditional quantiles of the dependent variable, providing a more comprehensive understanding of the data distribution.

– Application: Useful when the assumptions of normality and homoscedasticity are not met.
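Quantile estimates come from minimizing the pinball (quantile) loss, which weights over-predictions and under-predictions asymmetrically. The sketch below omits features and simply searches the observed values for the best constant prediction, which is enough to show that the loss targets a quantile rather than the mean. The function names and data are hypothetical.

```python
def pinball_loss(y_true, y_pred, q):
    """Penalize under-prediction by q and over-prediction by (1 - q)."""
    diff = y_true - y_pred
    return q * diff if diff >= 0 else (q - 1) * diff


def best_constant(ys, q):
    """Observed value with the lowest total pinball loss for quantile q."""
    return min(ys, key=lambda c: sum(pinball_loss(y, c, q) for y in ys))


data = [1.0, 2.0, 3.0, 4.0, 100.0]  # one large outlier
median_estimate = best_constant(data, q=0.5)
upper_estimate = best_constant(data, q=0.9)
```

For `q=0.5` the minimizer is the median (3.0), which the outlier does not move, while `q=0.9` selects the top of the sample. A quantile regression model applies the same loss to predictions that depend on the features.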

13. Principal Component Regression (PCR):

– Overview: PCR combines principal component analysis (PCA) and linear regression. It projects the original features into a lower-dimensional space before applying regression.

– Application: Helpful when dealing with multicollinearity and a high number of features.

14. Gaussian Process Regression:

– Overview: Gaussian Process Regression is a non-parametric Bayesian approach that places a probability distribution over possible functions rather than fitting a single one, so each prediction comes with an uncertainty estimate.

– Application: Suitable for cases where the underlying relationship is complex and uncertain.

15. XGBoost Regression:

– Overview: XGBoost is an optimized implementation of gradient boosting that has gained popularity for its speed and accuracy, especially on structured/tabular data.

– Application: Widely used in competitions and real-world scenarios for its robust performance and ability to handle missing data.