What Is Pipeline Command in Linux?

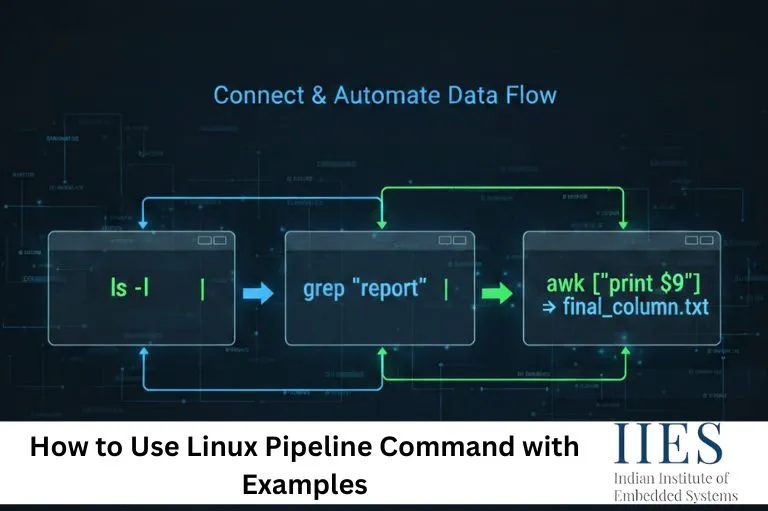

A pipeline in Linux is a combination of two or more commands connected using the pipe operator (|), where the standard output (stdout) of one command is passed directly as the standard input (stdin) of the next command.

Linux Input/Output Streams

Pipelines operate using Linux I/O streams:

- Standard Input (stdin – file descriptor 0)

- Standard Output (stdout – file descriptor 1)

- Standard Error (stderr – file descriptor 2)

When commands are connected with a pipeline, they execute concurrently, allowing data to flow smoothly from one process to another without being written to disk.

Why pipelines matter

They follow the Unix philosophy — build complex tasks using small, simple, and reusable tools.

Uses of Pipelines in Linux

Linux pipelines are commonly used for:

- Filtering and searching text data

- Processing and formatting files

- Sorting and aggregating output

- Log file analysis

- Monitoring system processes

- Automating administrative tasks

- Generating system and performance reports

Syntax of Pipeline (|) Operator

Basic Syntax:

command1 | command2 | command3 | ...

Explanation:

- command1 generates output

- command2 filters or processes that output

- command3 further modifies the result

Each command in the pipeline performs a single, focused task, making pipelines modular and readable.

Linux Pipe Command Examples (Basic)

Example 1: Display Only Matching Files

ls | grep ".txt"

Lists all files and directories and displays only files ending with .txt.

Use case: Quickly filter files by extension.

Example 2: Count Files and Directories

ls | wc -l

Counts the total number of files and directories in the current directory.

Why this works: ls sends output → wc -l counts the number of lines.

Using Pipe Operator in Linux

The pipe operator (|) enables Linux command chaining, allowing multiple commands to work together.

Example: Filter Running Processes

ps aux | grep root

Displays all running processes and filters those owned by the root user.

To avoid matching the grep command itself:

ps aux | grep [r]oot

Example: View Recent Command History

history | tail -10

Displays the last 10 executed commands.

Why useful: Great for auditing or recalling recent terminal activity.

Linux Pipeline Examples with grep, awk, and sed

Using grep in a Pipeline

dmesg | grep error

Searches kernel log messages for lines containing the word error.

Use case: System troubleshooting and log analysis.

Using awk in a Pipeline

ls -l | awk '{print $9}'

Extracts and displays only file names from a long directory listing.

Why awk: It works column-wise, making it ideal for structured output.

Using sed in a Pipeline

cat file.txt | sed 's/Linux/UNIX/g'

Replaces all occurrences of Linux with UNIX in the output.

Note: The original file remains unchanged.

Combined grep, awk, and sed Example

ps aux | grep apache | awk '{print $2}' | sed 's/^/PID: /'

This pipeline:

- Filters Apache processes

- Extracts process IDs

- Formats the output

Multiple Pipes in Linux

Linux allows multiple pipe operators to be used in a single command.

Example:

ps aux | grep apache | awk '{print $2}' | sort -n

What This Does:

- Displays all running processes

- Filters Apache-related processes

- Extracts PIDs

- Sorts them numerically

Multiple pipelines make complex processing efficient and readable.

Using tee Command in Linux Pipelines

The tee command reads from standard input and writes to:

- Standard output

- One or more files

Syntax:

command | tee filename

Example:

ls | tee output.txt

Displays the directory listing and saves it to output.txt.

Example with Pipeline:

df -h | tee disk_usage.txt | grep "/dev"

- Saves full disk usage to a file

- Displays only device-related entries

To append instead of overwrite:

command | tee -a filename

Xargs Pipeline Examples

The xargs command converts standard input into command-line arguments.

Example: Remove Log Files

find . -name "*.log" | xargs rm

Safe version (handles spaces):

find . -name "*.log" -print0 | xargs -0 rm

Count Lines in Multiple Files

ls *.txt | xargs wc -l

Redirect Output in Linux with Pipeline

Redirect Output to a File:

ps aux | grep root > output.txt

Saves the filtered output to a file.

Redirect Output and Errors:

command 2>&1 | tee error.log

Redirects both stdout and stderr to a file while displaying them.

Practical Linux Pipeline Examples

Example 1: Find Largest Files and Directories

du -ah . | sort -rh | head -10

Displays the top 10 largest files and directories.

Example 2: Monitor System Processes

top -b -n 1 | head -20

Displays the top running processes in batch mode.

Example 3: Count Word Frequency

cat file.txt | tr ' ' '\n' | sort | uniq -c | sort -nr

Counts how often each word appears in a file.

Advantages of Using Linux Pipelines

- Faster command execution

- No need for temporary files

- Reduced disk I/O

- Efficient memory usage

- Simplifies automation and scripting

- Encourages modular command usage

Common Mistakes in Pipeline Commands

- Using commands in the wrong order

- Ignoring standard error output

- Not handling filenames with spaces

- Overusing cat unnecessarily

- Forgetting performance considerations

Example of Unnecessary cat:

cat file.txt | grep error

Better:

grep error file.txt

Interview Questions on Linux Pipeline

- What is a pipeline in Linux? A pipeline connects multiple commands using the pipe operator so the output of one command becomes the input of another.

- What is the use of the pipe (|) operator? It allows efficient command chaining and real-time data processing.

- What is the difference between pipe and redirection? A pipe sends output to another command, while redirection sends output to a file.

- Can we use multiple pipes in Linux? Yes, Linux supports unlimited pipelines.

- What is the tee command used for? To display output on the terminal and save it to a file simultaneously.

- Explain an xargs pipeline example. xargs converts standard input into arguments for another command, enabling batch processing.

Conclusion

The pipeline command in Linux is a core concept for efficient command-line usage. By mastering pipelines — including multiple pipes, grep, awk, sed, tee, xargs, and output redirection — users can perform complex tasks using simple, readable commands. A strong understanding of Linux pipeline examples is essential for anyone working with Linux systems in real-world environments, especially system administrators, developers, and DevOps engineers.