1. Supervised Learning

Supervised learning is one of the most common types of machine learning. In this approach, the algorithm is trained on a labeled dataset, meaning that each training example is paired with an output label. The goal is for the model to learn a mapping from inputs to outputs that can be applied to new, unseen data. Supervised learning can be further categorized into:

- Regression: Predicting a continuous value. For example, predicting house prices based on features like size, location, and age.

- Classification: Predicting a discrete label. For example, determining whether an email is spam or not.
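
As a rough illustration of both task types, the sketch below fits a least-squares line to a few made-up house sizes and prices (regression) and derives a simple cut-off on a made-up "suspicious word count" feature (classification). All data values and the names `fit_line`, `fit_threshold`, and `classify` are assumptions introduced for this example only.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def fit_threshold(values, labels):
    """Very simple 1-D classifier: cut halfway between the two class means."""
    pos = [v for v, label in zip(values, labels) if label == 1]
    neg = [v for v, label in zip(values, labels) if label == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def classify(value, threshold):
    """Return 1 (spam) above the threshold, otherwise 0 (not spam)."""
    return 1 if value > threshold else 0


# Regression: made-up labeled examples (size in m^2 -> price in thousands).
sizes = [50, 80, 100, 120]
prices = [150, 240, 300, 360]
slope, intercept = fit_line(sizes, prices)
predicted_price = slope * 90 + intercept  # prediction for an unseen size

# Classification: made-up counts of suspicious words, label 1 = spam.
word_counts = [0, 1, 5, 7]
is_spam = [0, 0, 1, 1]
threshold = fit_threshold(word_counts, is_spam)
print(predicted_price, threshold, classify(4, threshold))
```

In both cases the labels drive the fit; the learned parameters are then applied to inputs the model has not seen.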

2. Unsupervised Learning

Unsupervised learning deals with unlabeled data. The algorithm tries to learn the underlying structure of the data without guidance on what the output should be. Common techniques include:

- Clustering: Grouping data points into clusters based on similarity. For example, customer segmentation in marketing.

- Dimensionality Reduction: Reducing the number of features while preserving as much of the information (for example, the variance) in the data as possible. Techniques like Principal Component Analysis (PCA) are used for this purpose.
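
To make the clustering idea concrete, here is a minimal sketch of k-means on a single feature. The source does not name a specific clustering algorithm, so k-means is an assumption, as are the spending figures, the starting centroids, and the function name `kmeans_1d`.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid
    and moving each centroid to the mean of its assigned points."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Made-up monthly spend per customer; no labels are provided.
spend = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centers, groups = kmeans_1d(spend, centroids=[1.0, 12.0])
print(centers)
print(groups)
```

No output labels are involved: the two customer segments emerge purely from how close the values are to each other.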

3. Semi-Supervised Learning

This approach is a hybrid of supervised and unsupervised learning. It uses a small amount of labeled data and a large amount of unlabeled data. The labeled data helps guide the learning process, which can improve the performance of the model when it’s difficult or expensive to obtain a fully labeled dataset.
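
One common way to combine the two kinds of data is self-training (also called pseudo-labeling): fit a model on the labeled examples, use it to label the unlabeled ones, then refit on everything. The source does not prescribe this particular method, so treat the sketch below as one possible illustration; the data and the helper names `class_means` and `predict` are assumptions.

```python
def class_means(values, labels):
    """Mean feature value per class (a nearest-centroid model)."""
    means = {}
    for cls in set(labels):
        members = [v for v, label in zip(values, labels) if label == cls]
        means[cls] = sum(members) / len(members)
    return means


def predict(means, value):
    """Assign the class whose mean is closest to the value."""
    return min(means, key=lambda cls: abs(value - means[cls]))


# Only two labeled examples, but six unlabeled ones (made-up values).
labeled_x = [1.0, 9.0]
labeled_y = ["low", "high"]
unlabeled_x = [2.0, 3.0, 4.0, 8.0, 10.0, 11.0]

# Step 1: fit on the small labeled set.
initial_means = class_means(labeled_x, labeled_y)

# Step 2: pseudo-label the unlabeled data with the initial model.
pseudo_y = [predict(initial_means, v) for v in unlabeled_x]

# Step 3: refit using the labeled and pseudo-labeled data together.
refined_means = class_means(labeled_x + unlabeled_x, labeled_y + pseudo_y)
print(initial_means, refined_means)
```

The refined class means reflect the structure of the unlabeled data, which the two labeled points alone could not capture. In practice, pseudo-labels can also reinforce early mistakes, so they are often filtered by confidence.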

4. Reinforcement Learning

Reinforcement learning is inspired by behavioral psychology. An agent learns to make decisions by performing actions in an environment to maximize some notion of cumulative reward. This type of learning is particularly effective for tasks where decision making is sequential, such as game playing, robotics, and autonomous driving.
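
The following is a minimal sketch of tabular Q-learning, one widely used reinforcement learning algorithm (the source does not name a specific one). The five-state corridor environment, the reward scheme, and the constants `ALPHA` and `GAMMA` are all assumptions made for illustration. For simplicity the agent explores by picking actions uniformly at random; Q-learning is off-policy, so it can still learn the values of the greedy policy.

```python
import random

N_STATES = 5        # states 0..4; state 4 is the goal
ACTIONS = (-1, 1)   # action 0 moves left, action 1 moves right
ALPHA = 0.5         # learning rate
GAMMA = 0.9         # discount factor for future reward


def step(state, action):
    """Hypothetical environment: reward 1.0 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, max_steps=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            a = rng.randrange(2)  # uniform random exploration
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning target: immediate reward plus discounted best future value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy policy: for each non-terminal state, pick the action with the higher value.
policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

Because the reward arrives only at the end of the corridor, the value of moving right has to propagate backwards through earlier states, which is what makes the problem sequential.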

5. Overfitting and Underfitting

- Overfitting: Occurs when a model learns the training data too well, including its noise and outliers, which negatively affects its performance on new data. The model becomes too complex and fails to generalize.

- Underfitting: Happens when a model is too simple to capture the underlying patterns in the data, leading to poor performance on both the training data and new data.
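
The contrast can be seen on a small made-up dataset. In the sketch below, the training targets follow y = 2x with alternating noise of +1 and -1, while the test targets are noise-free. Three stand-in models are compared: a constant prediction (too simple), a least-squares line, and a 1-nearest-neighbor "memorizer" (too flexible). The data and all function names are assumptions for illustration.

```python
def mse(model, xs, ys):
    """Mean squared error of a prediction function on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def constant_model(xs, ys):
    """Underfits: always predicts the mean of the training targets."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def line_model(xs, ys):
    """Least-squares straight line, which matches the true trend here."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def memorizer_model(xs, ys):
    """Overfits: returns the target of the closest training point, noise included."""
    def predict(x):
        nearest = min(range(len(xs)), key=lambda i: abs(xs[i] - x))
        return ys[nearest]
    return predict


train_x = [0, 1, 2, 3, 4, 5]
train_y = [1, 1, 5, 5, 9, 9]          # 2x plus noise of +1, -1, +1, ...
test_x = [0.4, 1.4, 2.4, 3.4, 4.4]
test_y = [0.8, 2.8, 4.8, 6.8, 8.8]    # 2x without noise

results = {}
for name, build in [("constant", constant_model),
                    ("line", line_model),
                    ("memorizer", memorizer_model)]:
    model = build(train_x, train_y)
    results[name] = (mse(model, train_x, train_y), mse(model, test_x, test_y))
    print(name, results[name])
```

The memorizer reaches zero training error yet does worse than the line on the test set, while the constant model does poorly on both sets.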

6. Model Evaluation and Validation

To ensure that a machine learning model performs well on unseen data, it’s essential to evaluate and validate it. Common techniques include:

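- Train/Test Split: Dividing the dataset into a training set, used to fit the model, and a test set, held back to measure performance on data the model has not seen.

- Cross-Validation: Dividing the data into multiple folds, then repeatedly training on all folds but one and testing on the held-out fold, so that each fold serves as the test set once. Averaging the scores gives a more stable estimate than a single split.

- Evaluation Metrics: For classification, metrics such as accuracy, precision, recall, F1 score, and ROC-AUC are used to assess the model’s performance.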
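
The three techniques can be sketched in plain Python as follows. The function names, the default split fraction, and the example labels are assumptions for illustration; libraries such as scikit-learn provide production-ready equivalents.

```python
import random


def train_test_split(items, test_fraction=0.25, seed=0):
    """Shuffle a copy of the data and hold back a fraction for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs; every index is tested exactly once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n) if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return accuracy, precision, recall, f1


train_items, test_items = train_test_split(list(range(8)))
folds = list(k_fold_indices(10, 3))

# Made-up true labels and predictions for eight examples.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
metrics = classification_metrics(y_true, y_pred)
print(len(train_items), len(test_items), metrics)
```

Precision asks how many predicted positives were correct, while recall asks how many actual positives were found; F1 is their harmonic mean.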

7. Feature Engineering

Feature engineering involves selecting, modifying, and creating new features from raw data to improve the performance of machine learning models. Good feature engineering can significantly enhance the predictive power of a model. This process may include:

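- Normalization/Standardization: Adjusting the scale of features, for example by rescaling values to a fixed range such as 0 to 1 (normalization) or to zero mean and unit variance (standardization).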

- Encoding Categorical Variables: Transforming categorical variables into numerical values.

- Creating Interaction Features: Combining features to capture additional information.
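
A small sketch of each step is shown below. The feature values and the function names are made up for illustration, and one-hot encoding is used as one common way (among several) to turn categories into numbers.

```python
def min_max_normalize(values):
    """Rescale values to the range 0 to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = sum(values) / len(values)
    std = (sum((v - mean) ** 2 for v in values) / len(values)) ** 0.5
    return [(v - mean) / std for v in values]


def one_hot(values):
    """Encode each category as a 0/1 vector, one position per category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


# Made-up raw features for three houses.
sizes = [50.0, 100.0, 150.0]
rooms = [2, 3, 4]
locations = ["city", "suburb", "city"]

normalized = min_max_normalize(sizes)
standardized = standardize(sizes)
encoded = one_hot(locations)
# Interaction feature: the product of two existing features.
size_times_rooms = [s * r for s, r in zip(sizes, rooms)]
print(normalized, standardized, encoded, size_times_rooms)
```

When a train/test split is used, scaling parameters such as the mean and standard deviation are normally computed on the training set only and then reused on the test set, so that no information leaks from the test data.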

8. Model Selection

Choosing the right model is crucial for solving a given problem effectively. Different algorithms have varying strengths and weaknesses depending on the nature of the data and the task. Commonly used models include:

- Linear Regression: For regression tasks.

- Logistic Regression: For binary classification tasks.

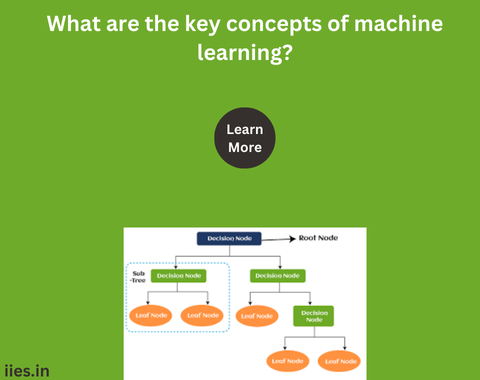

- Decision Trees: For both classification and regression tasks.

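- Support Vector Machines (SVM): Primarily for classification tasks, although a regression variant also exists.

- Neural Networks: For complex tasks, such as image or language problems, where deep architectures can learn useful features directly from raw data.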
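
As an example of one model from this list, the sketch below trains a logistic regression classifier on a single feature with plain gradient descent. The data (hours studied versus pass/fail), the learning rate, and the number of epochs are assumptions chosen for illustration, and the feature is centered first to keep the optimization well behaved.

```python
import math


def sigmoid(z):
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=1000):
    """Gradient descent on the average log loss for p = sigmoid(w * x + b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        preds = [sigmoid(w * x + b) for x in xs]
        grad_w = sum((p - y) * x for p, x, y in zip(preds, xs, ys)) / n
        grad_b = sum(p - y for p, y in zip(preds, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Made-up data: hours studied and whether the exam was passed (1) or not (0).
hours = [0, 1, 2, 3, 4, 5]
passed = [0, 0, 0, 1, 1, 1]

mean_hours = sum(hours) / len(hours)
centered = [h - mean_hours for h in hours]
w, b = fit_logistic(centered, passed)


def predict(h):
    """Predict 1 when the estimated probability is at least 0.5."""
    return 1 if sigmoid(w * (h - mean_hours) + b) >= 0.5 else 0


print([predict(h) for h in hours])
```

A linear regression would output an unbounded number here, whereas the sigmoid keeps the output in the range 0 to 1 so it can be read as a class probability.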

9. Hyperparameter Tuning

Hyperparameters are settings that are specified before training rather than learned from the data, such as the learning rate, the number of trees in a random forest, or the number of layers in a neural network. Tuning these hyperparameters is crucial for optimizing model performance. Two commonly used techniques are grid search, which evaluates every combination in a predefined set of values, and random search, which evaluates a random sample of combinations.
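
Both search strategies can be sketched in a few lines. In the example below the model is a 1-D k-nearest-neighbors classifier and the only hyperparameter is `k`; the model choice, the data (which contains two deliberately mislabeled training points), and the function names are assumptions for illustration.

```python
import itertools
import random


def knn_predict(train, x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    votes = [label for _, label in nearest]
    return max(set(votes), key=votes.count)


def accuracy(train, validation, k):
    correct = sum(knn_predict(train, x, k) == y for x, y in validation)
    return correct / len(validation)


def grid_search(param_grid, score_fn):
    """Score every combination; ties keep the first combination seen."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in itertools.product(*(param_grid[n] for n in names)):
        params = dict(zip(names, combo))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def random_search(param_grid, score_fn, n_trials, seed=0):
    """Score a random sample of combinations instead of all of them."""
    rng = random.Random(seed)
    trials = [{n: rng.choice(v) for n, v in param_grid.items()}
              for _ in range(n_trials)]
    best = max(trials, key=score_fn)
    return best, score_fn(best)


# Made-up data: class 0 below 5, class 1 above, with noisy labels at x=3 and x=7.
train = [(1, 0), (2, 0), (3, 1), (4, 0), (6, 1), (7, 0), (8, 1), (9, 1)]
validation = [(2.9, 0), (3.2, 0), (6.9, 1), (7.2, 1), (1.5, 0), (8.5, 1)]


def score(params):
    return accuracy(train, validation, params["k"])


grid = {"k": [1, 3, 5]}
best_params, best_score = grid_search(grid, score)
random_params, random_score = random_search(grid, score, n_trials=2)
print(best_params, best_score)
```

Scores are computed on a separate validation set rather than on the training data, since tuning on the training data would favor settings that overfit (here, `k=1`).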

10. Ensemble Learning

Ensemble learning involves combining multiple models to improve overall performance. The idea is that by aggregating the predictions of several models, the ensemble can often achieve better results than any of its individual members. Common ensemble methods include:

- Bagging: Building multiple models on different random samples of the training data, typically drawn with replacement, and combining their predictions by voting or averaging (e.g., Random Forest).

- Boosting: Building models sequentially, each trying to correct the errors of the previous one (e.g., Gradient Boosting Machines).
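
The bagging idea can be sketched as follows; boosting is not shown. Each base model here is a simple threshold placed halfway between the two class means, which assumes that class 1 has the larger feature values. The data, the number of models, and the function names are assumptions for illustration.

```python
import random


def fit_stump(sample):
    """Base model: threshold halfway between the class means.
    Assumes class 1 tends to have larger feature values than class 0."""
    zeros = [x for x, label in sample if label == 0]
    ones = [x for x, label in sample if label == 1]
    threshold = (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2
    return lambda x: 1 if x > threshold else 0


def bootstrap(data, rng):
    """Sample with replacement; redraw if a class is missing from the sample."""
    while True:
        sample = [rng.choice(data) for _ in data]
        if {label for _, label in sample} == {0, 1}:
            return sample


def fit_bagging(data, n_models=15, seed=0):
    """Train each base model on its own bootstrap sample."""
    rng = random.Random(seed)
    return [fit_stump(bootstrap(data, rng)) for _ in range(n_models)]


def predict_bagging(models, x):
    """Majority vote over the base models."""
    votes = sum(model(x) for model in models)
    return 1 if votes * 2 > len(models) else 0


# Made-up, well-separated data: (feature, label).
data = [(1, 0), (2, 0), (3, 0), (7, 1), (8, 1), (9, 1)]
ensemble = fit_bagging(data)
print(predict_bagging(ensemble, 3.5), predict_bagging(ensemble, 6.5))
```

Each base model sees a slightly different dataset, so their individual errors tend to differ; voting averages those differences out. An odd number of models avoids tied votes.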

Conclusion

Understanding these key concepts of machine learning provides a solid foundation for exploring and mastering the field. As the field continues to grow and evolve, staying updated with the latest advancements and continually practicing with real-world data will be essential for anyone looking to become proficient in machine learning.